A testing time for testing: Assessment literacy as a force for social good in the time of coronavirus

“The one thing that is worse than no test is a bad test.” Professor Chris Whitty’s memorable phrase made news in late March 2020 during the early weeks of lockdown in the UK. His comment came at a time when the media and the general public were eagerly discussing a range of take-at-home antibody tests coming to market. The logic was clear: not all tests are reliable (i.e., consistent and sufficiently accurate), and not all tests are valid (i.e., appropriate for the purpose for which they are used). A decision based on faulty test evidence can lead to a worse outcome than if no test were available in the first place. Tests can give a false sense of certainty, and false certainty in a high-stakes situation like medicine can have life-threatening consequences.

Since the early days of lockdown in the UK, we have seen other critical incidents involving testing and assessment in the education sector. In August 2020, in the absence of final exam data, a statistical algorithm was applied to school-based teacher assessments gathered earlier in the year in order to determine A-Level results. The algorithm’s negative impact provoked a public outcry, and the policy was reversed so that final results were instead based on the original teacher assessments. A similar problem with GCSE results was only narrowly avoided. Universities grappled with whether to hold in-person exams, and many professional tests moved to an online environment, with test security concerns addressed through remote proctoring.

Extraordinary times often call for extraordinary measures, and decisions made in haste or under pressure may not always lead to the best outcome. But how do policy makers, health professionals, educators, and the general public determine what is a good test and a fair assessment system in the first place? What specific knowledge and skills might be necessary to judge the quality and fairness of a test? People are comfortable making broad judgements of fairness and justice in many areas of public life (consider the reaction to a football referee whose decisions are seen as biased towards one team or another). However, there is a curious lack of discussion around tests and testing processes. It’s curious because we encounter multiple testing regimes throughout our lives: school exams, driving tests, medical tests, job interviews, citizenship tests (for some), professional certification exams. Testing is everywhere and yet, apart from isolated examples (such as this year’s A-Level results), the more technical intricacies of testing are rarely scrutinised by the news media and rarely the topic of public discussion.

In our field of educational assessment, a recent research programme has sought to understand the nature of ‘assessment literacy’ – defined broadly as the skills, knowledge and abilities that different stakeholders (such as teachers, admissions officers, policymakers) need to carry out assessment-related activities. It is self-evident that certain groups need to know a lot about testing and assessment: those who create the tests, those who research the tests, and those who use the tests. But we would argue that there is real benefit in raising the general level of assessment literacy in society more broadly, among those whose lives are affected by tests and those who may need to decide between one test and another.

If this were to happen, what should people know? Research on assessment literacy offers different frameworks to conceptualise the core elements of assessment knowledge, but some common principles emerge as particularly important for a public understanding of assessment. They include:

- Reliability, or consistency of measurement

Systems of checks and moderation are required to counter a range of threats to measurement reliability, such as inconsistent marking between examiners.

- Validity, or understanding the nature of what is being measured

Tests can be misused, or reused in a different context for which they were not designed and where they are inappropriate.

- Measurement uncertainty, or understanding that all measurement contains a degree of error

This is a fundamental consideration in interpreting and using any sort of test score; good tests try to minimise error while still remaining practical and useful.

- Interpretation of scores/results, or understanding the meaning of numbers or values

It is easy for us to invest numbers with meaning and power, but we do not always appreciate the complexity underpinning a given value. The R number (virus reproduction number) is a case in point. While seemingly transparent (‘below 1 is good, above 1 is bad’), the R number represents an average across very different epidemiological situations and should be considered a guide to the general trend rather than a description of the epidemic in local regions.

- The value of different types of tests and assessment, for instance, the relative merits of one-off, formal, end-of-school exams and centre/teacher-based assessments in educational settings

The former are typically considered more rigorous, and therefore the “best” form of assessment, but the Covid-19 situation highlighted the reality that high-stakes, end-of-school exams are high-risk endeavours if something goes wrong. This strengthens the case for greater emphasis on continuous assessment/assessment for learning/learning-oriented assessment, not only as a better approach pedagogically but also as a more risk-averse one. Broadening public understanding of what testing and assessment can be, what forms they can take and how different approaches can complement rather than compete with one another is essential. And when tests change (for instance, with university assessments moving online), new empirical evidence about how well they work should be collected and made available.

- The distinction between different approaches to testing and assessment, for instance, norm-referenced assessment (NRA) versus criterion-referenced assessment (CRA), and what they mean

The ongoing debate about university grade inflation is a case in point. The number of distinction grades at university is increasing, but there is no clear reason why proportions should remain the same over time. Fixed proportions are a feature of NRA, where grades are allocated according to a candidate’s rank within the cohort. Universities typically assess according to the principles of CRA, against standards of performance expressed through performance descriptors, so if more students meet those standards, more students will receive the higher grades. Whether there is grade inflation is an empirical question that could be investigated. But the mere fact that there are more distinction-level grades does not in itself provide evidence of a relaxation in standards, or grade ‘inflation’.

- The role and impact of technology in assessment, including its benefits and its limitations

The use of increasingly sophisticated, machine-based methods to calculate and report test results risks distancing the human designers of such systems from the outcomes of the assessment. We saw this with the A-Level results, when many blamed the computational system, and the Prime Minister even referred to it as a “mutant algorithm”! Yet an algorithm does only what it is told to do, in this case setting severe constraints on the proportion of each grade that would be allowed for each school or exam centre. Assessment literacy, therefore, intersects with other types of knowledge that are of increasing relevance in the digital age: statistical literacy, data literacy, algorithmic literacy, and computer literacy, among others.
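To make the difference between norm-referenced and criterion-referenced grading more concrete, here is a toy Python sketch. Everything in it is a hypothetical assumption for illustration: the eight candidates, their marks, the grade boundaries and the grade quotas. It is not a reconstruction of the model used for the 2020 A-Level results; it only shows why a rule that fixes the proportion of each grade behaves differently from a rule that fixes the standard needed for each grade when a cohort performs better.

```python
# Toy illustration only: all marks, cut-scores and quotas are hypothetical.

def criterion_referenced(marks, cut_scores):
    """Award each candidate the highest grade whose fixed cut-score they reach (CRA)."""
    grades = []
    for mark in marks:
        grade = "U"
        for label, threshold in cut_scores:  # ordered from highest grade to lowest
            if mark >= threshold:
                grade = label
                break
        grades.append(grade)
    return grades


def norm_referenced(marks, quotas):
    """Rank candidates and hand out grades by fixed shares of the cohort (NRA)."""
    order = sorted(range(len(marks)), key=lambda i: marks[i], reverse=True)
    grades = [None] * len(marks)
    position = 0
    for label, share in quotas:  # ordered from highest grade to lowest
        count = round(share * len(marks))
        for i in order[position:position + count]:
            grades[i] = label
        position += count
    for i in order[position:]:
        grades[i] = "U"  # anyone left once the quotas are used up
    return grades


marks_last_year = [45, 52, 58, 63, 71, 77, 84, 90]
marks_this_year = [m + 8 for m in marks_last_year]  # every candidate does better
cut_scores = [("A", 80), ("B", 65), ("C", 50)]
quotas = [("A", 0.25), ("B", 0.25), ("C", 0.25)]

for year, marks in [("last year", marks_last_year), ("this year", marks_this_year)]:
    cra = criterion_referenced(marks, cut_scores)
    nra = norm_referenced(marks, quotas)
    print(f"{year}: A grades (CRA) = {cra.count('A')}, A grades (NRA) = {nra.count('A')}")
# last year: A grades (CRA) = 2, A grades (NRA) = 2
# this year: A grades (CRA) = 3, A grades (NRA) = 2
```

Under the criterion-referenced rule, the stronger cohort earns more A grades because more candidates reach the same standard; under the quota rule, the share of A grades cannot change however well the cohort performs. This is why, under CRA, a rising number of top grades is not by itself evidence of inflation.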

This year has seen close scrutiny of both the A-Level results and the outcomes from medical testing systems. This type of public debate around assessment matters is healthy for our society, in the same way that Parliamentary scrutiny is good for democracy. In theory, tests and exams contribute to a society where high-quality data is gathered to inform sound decision-making for the common good, and where opportunities are awarded on the basis of talent, effort and achievement rather than wealth or social class. They appeal to a common-sense approval of rigour and high standards. But tests can also be used as a convenient political tool to show that something is being done, or to achieve policy objectives beyond what a test was originally designed to assess, so the role of tests within a socio-political landscape also needs to be understood.

Ultimately, a broadly knowledgeable public is the best means of holding those who use tests for high-stakes decisions accountable to the principles of fairness and justice. Widening assessment literacy will only serve as a force for social good if those who take assessments, and are affected by them, are also able to question and think critically about the connection between the evidence collected by a test, the result and the ultimate decision.

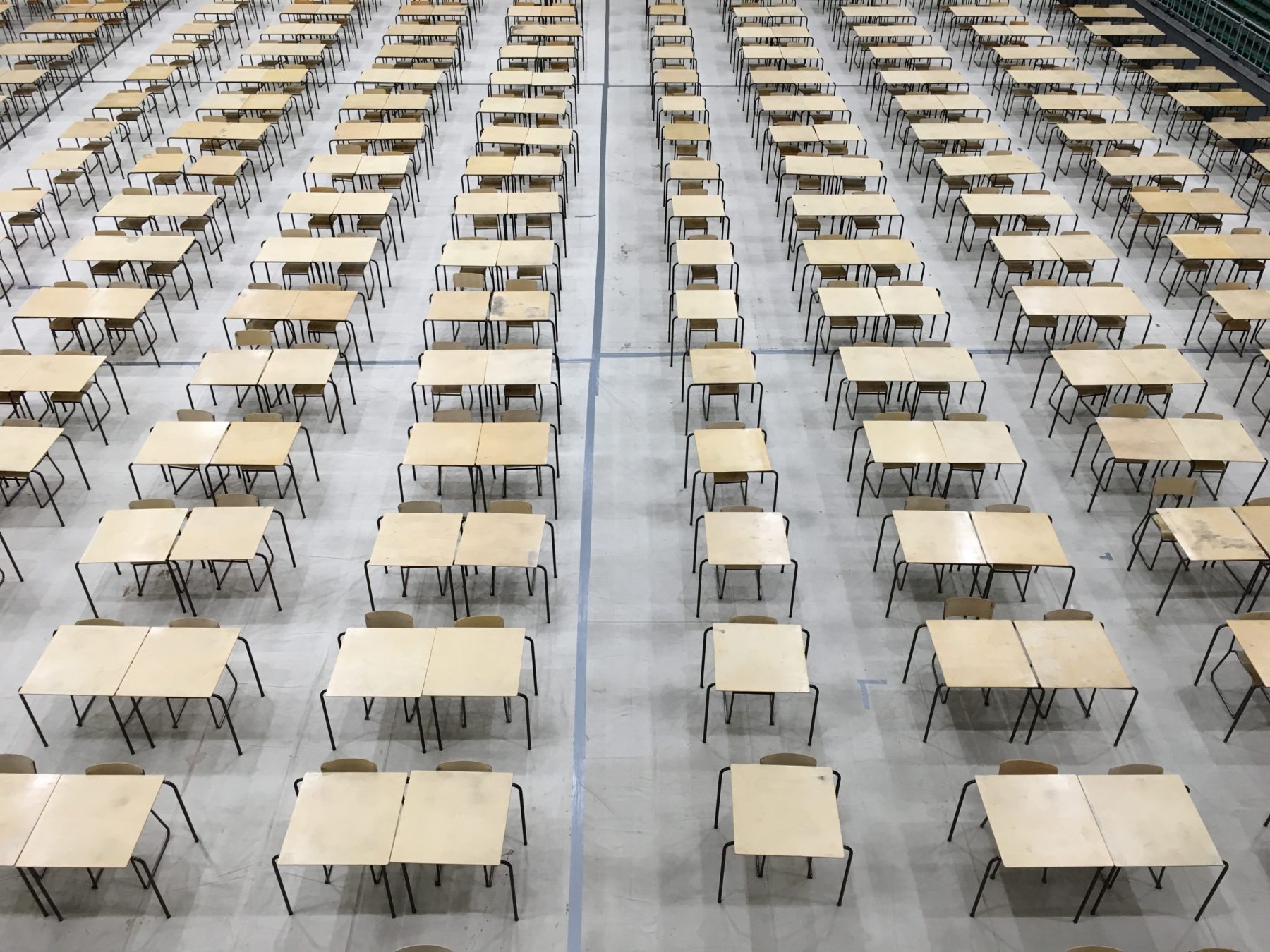

Photo Credit: Akshay Chauhan on Unsplash

About the authors

Lynda Taylor is Visiting Professor in English Language Assessment at the Centre for Research in English Language Learning and Assessment (CRELLA) at the University of Bedfordshire. She is also President of the UK Association of Language Testing and Assessment (UKALTA). Luke Harding is Professor in Linguistics and English Language at Lancaster University. He has expertise in areas including language assessment literacy and diagnostic language assessment.